The Subprime Data Crisis

Tearing down and rebuilding the data foundation society is built on

CLEAN DATA | DATA AGENCY

Michael James Munson

9/22/2025 | 11 min read

Original Publication Date: July 18, 2025 on Marketing Accountability Council

A poll released the week of July 1, 2025 indicated that 48% of Americans were aware of a budget bill going through Congress. After factoring in how many people knew not just of the bill but about its contents, only an estimated 24% of Americans knew how this bill will fundamentally shape their lives.

How do we get to a point like this, where so many people are so shockingly uninformed about what the people writing the laws that govern their lives are actually doing to them? The 76% of Americans who were clueless about the bill wouldn’t let someone steal 20% of the money in their bank account, yet they were blissfully unaware that the bill they knew nothing about will reduce their Social Security payments by 20%, starting in 2032.

We live in a world with technology that allows hyper-democratization, the ability to be informed at a level and scale that has never existed in history. Yet, despite the fact we consume more data than ever before, we have the most ignorant society we have ever had. Why? It’s by design. Those with a vested interest in controlling others and holding on to their power know an informed population is a threat. This is why DATA is the coin of our modern digital realm.

The quality of data we build our society on, and the means by which we share it, determines everything in our lives, particularly how resources are allocated. By all measures (quality, trust, legality, ethics), that foundation is now compromised and unable to sustain what it is being counted on to support. The cracks are everywhere. And now the same people who gave us the Big Tech business model that left us with such a weak foundation are allocating untold billions of dollars to developing artificial intelligence (designed to replace us, drive up our energy costs, and create even more inequality, when we didn’t ask for it), adding on to this “Rube Goldberg Machine” of a structure that is supposed to support humanity.

It all means society is at risk of collapse if nothing is done to strengthen its foundation. Enter a startup company teaming up with the MAC to rebuild it with a much stronger and more ethical data structure, one that enables collaborative data sharing and rejects top-down directives. It’s done by changing our relationship to data, replacing the paradigm that has left us so vulnerable, and no longer trusting the people who gave us the failed data foundation in the first place.

Strap in, folks. This is about to get interesting…

The data context

Let’s start with the cliché that won’t die: “Data is the new oil.”

It’s not wrong. Data does power the modern economy. But unlike oil, data’s value isn’t in how much you extract, it’s in how accurately, ethically, and consensually it’s used. And that’s where the comparison collapses.

Data’s worth is entirely dependent on quality and ownership. And both are in shambles.

Let’s look at the state of play:

$3.1 trillion: That’s the estimated cost of poor data quality to U.S. businesses annually, according to research by IBM. The average company loses $15 million annually due to poor inputs, including errors, inefficiencies, and missed opportunities.

27% of employee time is spent just fixing broken data. That’s over a quarter of human capital down the drain for damage control.

According to Gartner and Experian reports, bad or stolen data corrodes customer trust, warps analytics, stifles innovation, and creates cascading compliance risks for businesses and institutions alike.

But this isn’t just a business problem, it’s a systemic one. When inaccurate or unauthorized data is the foundation for major policy, medical research, insurance modeling, AI development, and media coverage, the effects become societal:

Decisions get made on false premises.

Entire populations are misrepresented or ignored.

Privacy is breached and weaponized.

Trust in institutions erodes.

Innovation stalls because the signals are garbage.

And here's the kicker: when people feel surveilled, exploited, or kept in the dark about how their data is used, they retreat. Fewer people share data. The data pool becomes thinner, less diverse, and less reliable. The vicious cycle accelerates.

Meanwhile, the companies profiting off this chaos have no incentive to clean it up. They collect detailed behavioral data to sell to advertisers—accurate enough to manipulate you, but not accurate enough to empower you. And they certainly aren’t losing sleep over whether you have access to the quality of information they use to target you.

This asymmetry is the root of the problem: a data economy where value extraction is prioritized over truth, permission, and dignity.

We’re not just in an era of misinformation. We’re living through a subprime data crisis, where everything downstream is being built on corrupted, consentless, low-integrity information. And like the subprime mortgage crisis before it, this foundation will not hold.

The solution starts with flipping the model—from take without consent to collaborate with permission. From data as a product to data as a partnership. That’s what’s next.

What is data anyway?

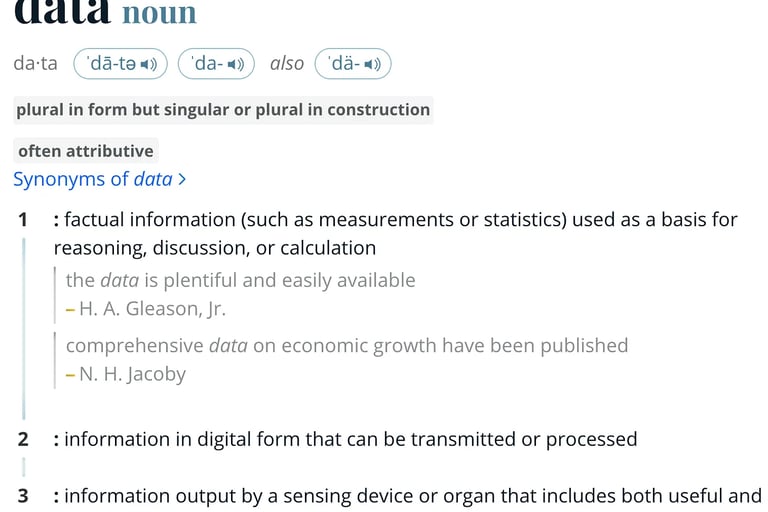

Here’s a screenshot of the Merriam-Webster definition of data:

It’s very easy to confuse data with “facts.” I mean look at the freaking definition. It refers to data being factual information, but also just information - factual or not - in digital form, and then reverts back to outputs from devices as factual (if configured to be accurate). When data doesn’t mean facts, and we make decisions based on data, we have potential for two very big problems that feed on each other:

1. Decisions based on poor or inaccurate data harm both organizations and individuals.

Bad data leads to flawed strategies, poor choices, wasted resources, lost opportunities, compliance issues, and diminished trust, with consequences ranging from financial loss to damaged reputations and missed opportunities for innovation or advancement.

2. If people fear their data will be misused, breached, or sold without their consent, they are less likely to share it.

Any reluctance to share data - driven by concerns over privacy, exploitation, and loss of control - reduces the overall quality, completeness, and representativeness of available data. When fewer people share it, the data that remains becomes less reliable, increasing the risk of making decisions based on incomplete or biased information, which perpetuates the cycle of poor outcomes.

When it comes to data about us as individuals, what we fear is having it attached to our personal identity. I’ve never used 23andMe or AncestryDNA tests because there is no way in hell I want the possibility of these companies selling my data to some third party who deduces I’m going to get a serious disease next week, with the result that insurance companies jack up my health insurance cost or, if certain laws are changed, the medical industrial complex denies me any health insurance coverage at all due to a pre-existing condition! I don’t even use an Apple Watch for similar reasons. Apple can tout its data security, but no system is foolproof. Heaven help us if there is a data breach of Apple Health data. I don’t have a Meta, LinkedIn, or Prime account either. I trust nobody with my data.

The Big Tech business model needs to die

One of the biggest myths about the internet is that it’s free to use. While many websites and services don’t charge users directly, there is a cost. Instead of paying with money, we pay with our personal information and our attention (our time), which they capture and monetize by selling ads that are put in front of us against our wishes.

With social media, the stakes are even higher. People share personal details that can be linked to their identities. This data allows platforms to deliver highly targeted content and ads, sometimes crafted to influence our opinions and behaviors in ways that can undermine our autonomy.

This system of constant tracking and content tailoring creates a feedback loop: the more we engage, the more content is optimized to capture our attention, maximizing ad exposure and profit for the platform at the expense of our privacy and free will.

Companies like Google and Meta have dominated by adhering to this business model that makes you the product for advertisers.

But here’s the thing. These companies place such a premium on providing their customers accurate data about you, that they collect it without you being aware (often not even on their own web pages), but they have no incentive or inclination to make sure you get quality data and have accurate information on which to make your decisions. You are at the mercy of whatever their platforms display in their interest.

How is it equitable for these big companies to catalogue your behavior in an effort to keep you on their screen and provide accurate data to their customers to exploit you, but show absolutely zero interest in making sure you get only quality data on which to make your decisions? And how about you getting paid for your data?

Most online information sources are designed to maximize user engagement and data collection, operating under the principle that more data leads to more views and higher engagement. This incentive structure has contributed to an internet crowded with fake content and bots that generate artificial engagement. Social media bots are programmed to mimic human behavior, inflating metrics like likes, shares, and comments to create a false sense of popularity and activity. This artificial engagement not only distorts what content appears popular but also makes it harder for users to distinguish genuine interactions from automated ones. (See problem #1 in the “What is data anyway?” section above.)

The “shout for attention without really listening” economy we have created is a disaster. How can we possibly accept this hit list of terrible outcomes it has given us?

Inundated with messages we don’t want to hear: The volume of unsolicited, irrelevant, or manipulative content overwhelms users, leading to information overload and fatigue.

Given a steady stream of poor data to make our decisions on: The quality of information is often compromised by misinformation, clickbait, and algorithmic bias, which hampers informed decision-making.

Unable to really trust anyone: The proliferation of fake news, bots, and manipulative content erodes trust in media, institutions, and even personal networks.

Forced to shout for our own attention: In a crowded digital space, individuals and organizations feel compelled to amplify their messages aggressively to be noticed.

Nobody thinks about long-term implications of short-term actions: This reflects the focus on immediate engagement metrics and profits at the expense of sustainable, ethical outcomes.

Algorithms prioritize engagement over truth: Platforms optimize for clicks and views, often promoting sensational or divisive content rather than balanced information.

Erosion of meaningful dialogue: Polarization, driven by the unequal quality of the data people consume, reduces opportunities for genuine understanding and empathy.

Privacy is routinely compromised: Personal data is collected and exploited without sufficient transparency or consent.

Mental health impacts: Constant exposure to overwhelming content and social comparison contributes to anxiety, stress, and other negative effects.

Economic inequality in attention: Those with more resources can dominate the attention economy, marginalizing smaller voices.

Lack of accountability: Platforms and content creators often face little consequence for spreading harmful or false information.

We ended up with this for the same reason 76% of Americans have no idea how the government is taxing and spending. But let’s not leave it on the doorstep of Americans alone. Globally, 68% of people think their country’s economy is rigged to favor the wealthy and privileged. We are here because we gave away our agency and let forces that want to manipulate us do so. It’s time to take our agency back. It all starts with our data.

The new paradigm is Data Agency

If you think of the data foundation underpinning society as concrete, the current paradigm is like pouring a foundation with the lowest-strength concrete there is, often referred to as Controlled Low-Strength Material (CLSM) or flowable fill, which is designed for non-structural applications where high strength is not required. I’m fairly certain nobody thought we were building our society entirely on this lousy Big Tech data foundation when it first started being used, but that’s what happened. It was never possible for it to support the societal weight it must bear today.

We now need a data foundation that can perform like Ultra-High Performance Concrete (UHPC), which can easily bear significant stresses. This innovative material is known for its exceptional strength and durability, making it suitable for demanding applications akin to supporting a global society. I’m fairly certain everyone would agree we need a strong underlying structure for a digital economy.

The CLSM foundation we have is a product of a “collect data without permission” model. The UHPC foundation we need will be a product of a “collect data with permission” model. Yes, I am talking about flipping the model here. And that’s precisely what is now being worked on.

What is Data Agency? In simple terms, it’s the capacity to control, direct, and make informed decisions about how the data you generate is used, shared, and acted upon.

Replacing the “take without permission” model with a consent-based one allows individuals to become partners with organizations (rather than products for them to exploit), granting permission for their data to be used in ways that are transparent and mutually beneficial. This approach not only restores trust and respects personal autonomy, but also offers tangible advantages for businesses, such as improved data quality, reduced compliance risks, and stronger customer loyalty. The long-term benefits of a permission-based system include a more sustainable, ethical, and innovative data ecosystem where both individuals and organizations thrive.

Anonymized data with permission > personal data without permission

Anonymized data that reveals deep insights into what people value and what moves them emotionally is often far more valuable than shallow personally identifiable information (PII) like names or addresses and even demographic data. While PII primarily serves to identify individuals, it carries significant privacy risks and must be heavily protected or removed to comply with regulations. In contrast, anonymized data - when properly processed to prevent re-identification - can capture patterns of behavior, preferences, and emotional responses without exposing anyone’s identity. This kind of data enables businesses and researchers to understand motivations, predict trends, and tailor experiences in a meaningful way, all while respecting user privacy. Because it focuses on collective insights rather than individual identities, anonymized data offers richer, ethically safer intelligence that drives better decision-making and innovation without compromising personal privacy.
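To make “properly processed to prevent re-identification” a little more concrete, here is a minimal sketch of one common safeguard: reporting only group-level counts and suppressing any group too small to hide an individual (an idea in the spirit of k-anonymity). Everything in it is an assumption for illustration, including the sample responses, the field names, and the `MIN_GROUP_SIZE` threshold. It is not a description of how any particular platform works, and small-group suppression on its own does not guarantee that data cannot be re-identified.

```python
from collections import Counter

# Hypothetical survey responses: (age_band, region, stated_value).
# No names, emails, or addresses are stored at any point.
RESPONSES = [
    ("25-34", "Midwest", "privacy"),
    ("25-34", "Midwest", "privacy"),
    ("25-34", "Midwest", "price"),
    ("25-34", "Midwest", "privacy"),
    ("35-44", "South", "price"),
    ("35-44", "South", "price"),
    ("35-44", "South", "price"),
    ("65+", "West", "privacy"),  # a group of one: easy to re-identify
]

MIN_GROUP_SIZE = 3  # assumed threshold, chosen only for this example


def aggregate(responses, min_group_size=MIN_GROUP_SIZE):
    """Count stated values per (age_band, region), dropping small groups."""
    group_sizes = Counter((age, region) for age, region, _ in responses)
    counts = {}
    for age, region, value in responses:
        if group_sizes[(age, region)] < min_group_size:
            continue  # suppress groups too small to hide an individual
        counts.setdefault((age, region), Counter())[value] += 1
    return counts


summary = aggregate(RESPONSES)
for group, values in sorted(summary.items()):
    print(group, dict(values))
```

The output still says what each group values, which is the insight the paragraph above is after, while the lone respondent in the smallest group never appears in it.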

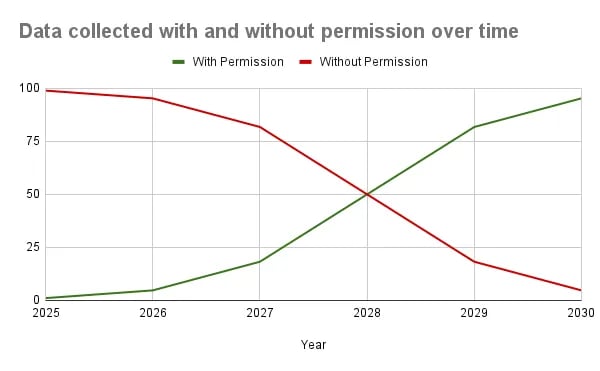

The chart above demonstrates a five-year evolution that will flip the market paradigm from the Big Tech and Data Broker business models that rely on taking and using data from us without permission, to the Data Agency model that collects data from us with permission in a spirit of collaboration. It uses the sigmoid function that shows slow initial adoption, followed by accelerated growth in the middle phase, and then a plateau as market saturation approaches. We project the crossover point to occur around 2028.
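For readers who want to see the shape of that curve in numbers, here is a rough sketch of a sigmoid (logistic) adoption model. The 2028 midpoint comes from the projection above. The `STEEPNESS` value and the assumption that the two models split the whole market between them are mine, picked only to illustrate the slow start, fast middle, and plateau. The chart’s actual parameters are not stated in this article.

```python
import math

CROSSOVER_YEAR = 2028  # projected crossover point from the article
STEEPNESS = 1.5        # assumed; controls how sharp the middle phase is


def data_agency_share(year, midpoint=CROSSOVER_YEAR, k=STEEPNESS):
    """Logistic (sigmoid) market share for permission-based data."""
    return 1.0 / (1.0 + math.exp(-k * (year - midpoint)))


def incumbent_share(year):
    """Share left with the take-without-permission model (assumed complement)."""
    return 1.0 - data_agency_share(year)


for year in range(2025, 2031):
    new = data_agency_share(year)
    old = incumbent_share(year)
    print(f"{year}: Data Agency {new:6.1%} | incumbents {old:6.1%}")
```

With a logistic curve the two shares are equal exactly at the midpoint, which is why the crossover year and the curve’s midpoint are the same number in this sketch.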

Are you with us?

A coalition of people who are simply no longer going to accept unacceptable marketing and data practices is now coming together. The MAC coalition honestly doesn’t care what the corporate world or the “experts” think. They can follow the disastrous playbooks that erode trust and sacrifice loyalty for short-term transactions. They can cling to dishonest gimmicks and underhanded tactics. We know a paradigm based on a foundation of authentic sharing, equitable value exchange, and trust - not skepticism - with a commitment to respect others - not abuse them - is a “better mousetrap” and will prevail. This is private sector warfare that determines how we run our society, not political theater.

We have the power to make the market rules. We’re building a Data Agency platform that protects your identity, allows you to control who gets your data, compensates you when it is sold, and lets you easily share it with whomever you choose.

The company - not even incorporated yet - is Daginty, and it is our mission to be the market leader forcing this change. The company name is inspired by the words “Data,” “Agency,” and “Dignity,” with a little poetic license thrown in to make it unique sounding: Day-jin-tee.

If you want to join the coalition and lend your support for improving our data foundation by giving you tools to take agency over your personal data, welcome aboard! You’ll be hearing more about how you can get involved and support the cause in the coming weeks and months.

Finally, don’t hang your head if the weight of our broken society is making you feel powerless. Help is on the way. As poet June Jordan wrote in her “Poem for South African Women,” and Barack Obama famously echoed: “We are the ones we have been waiting for!” We just have to come together in common cause. No matter your political ideology or belief system, I think we can all agree that accurate, ethical data is an essential foundation for us all, and that we all want not just to control our data but to make sense of it and be compensated for it. If you agree, there really is no reason not to join us. It’s time to support and be supported by your fellow citizens!